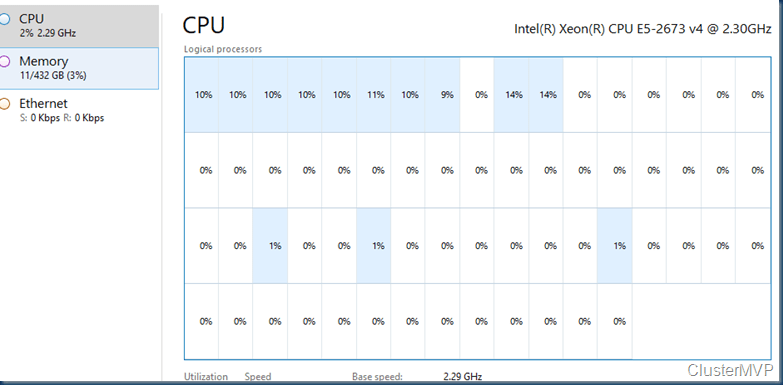

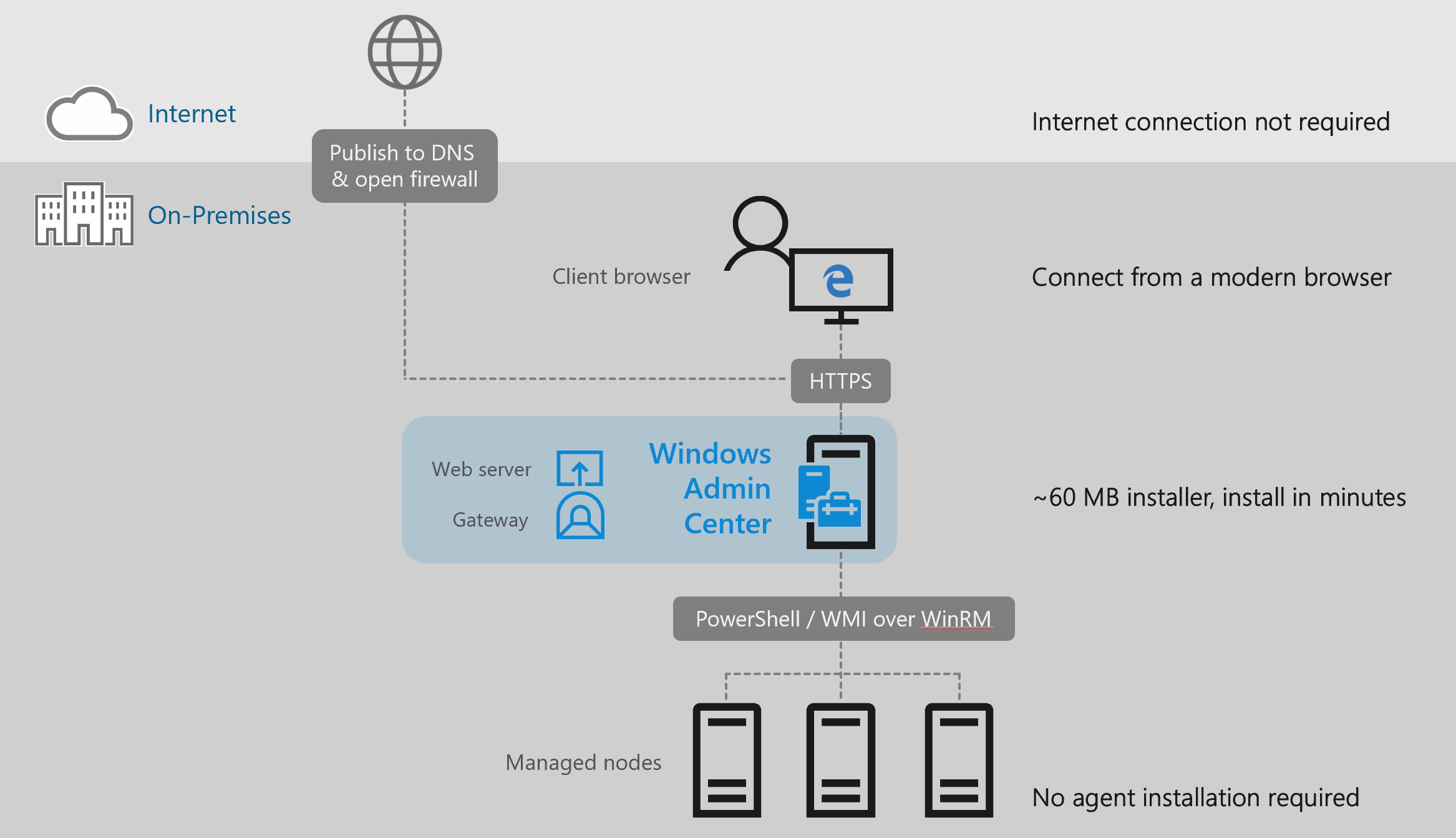

The new tool, Windows Admin Center, is THE tool to use when managing your environment. You can install it on almost any server (but not on a domain controller), but even that server needs a reboot from time to time. Therefore we make Windows Admin Center highly available. When it is installed on a cluster, the tool gets better uptime and is there when you need it, and the resources it uses are minimal.

Windows Admin Center is a new, locally-deployed, browser-based management tool set that lets you manage your Windows Servers with no Azure or cloud dependency. Windows Admin Center gives you full control over all aspects of your server infrastructure and is particularly useful for managing servers on private networks that are not connected to the Internet.

Windows Admin Center is the modern evolution of “in-box” management tools, like Server Manager and MMC. It complements System Center – it’s not a replacement.

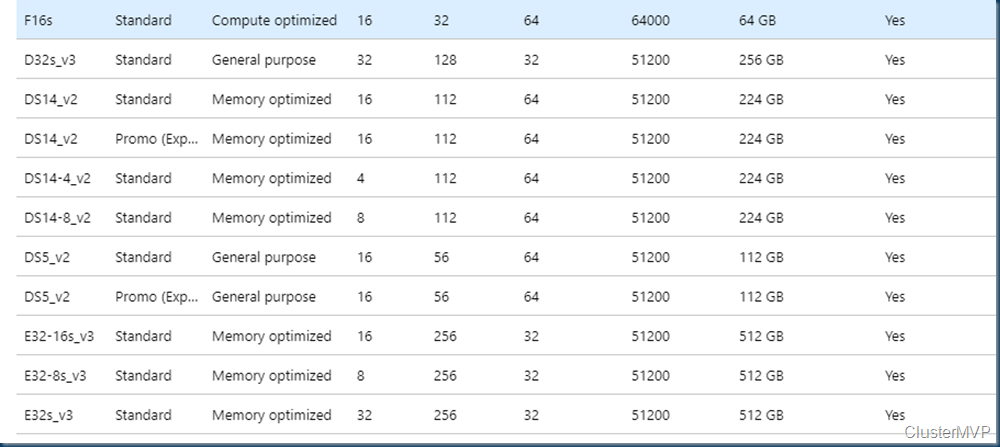

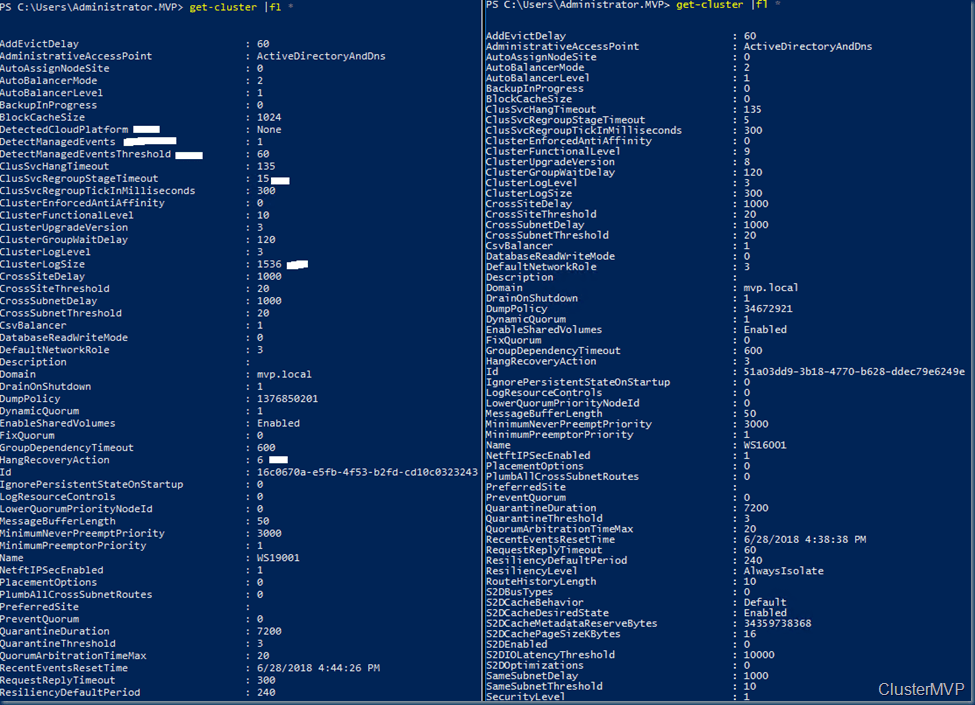

First we take our cluster, in this case my test cluster, which runs all kinds of workloads.

Get the latest Windows Admin Center build: http://aka.ms/WACDownload

Save it on the cluster node, and remember that you can’t run Windows Admin Center in Internet Explorer (IE)!

While checking this I saw that the cluster team had already created a PowerShell script to make WAC highly available, so there goes my blog.

Well, you can run the PowerShell scripts, but that’s no fun. Still, I understand if you are busy or don’t want to know what is behind the script.

https://docs.microsoft.com/en-us/windows-server/manage/windows-admin-center/deploy/high-availability

In this case I do it all manually. Well, not all of it: there are some good parts in the script that I reuse.

First we need a certificate. I use a self-signed one, and yes, it needs to be replaced every 90 days.

Fill in the variables:

$clientAccessPoint = "mvpwac03"

$tmpPassword = "Temppassword"

$certPath = "c:\temp\sme3.pfx"

#####

Write-Output "Creating self signed certificate"

$domain = (Get-WmiObject win32_computersystem).Domain

$dnsName = $clientAccessPoint + "." + $domain

$cert = New-SelfSignedCertificate -DnsName $dnsName -CertStoreLocation "cert:\LocalMachine\My" -NotAfter (Get-Date).AddMonths(3)

$certPassword = ConvertTo-SecureString -String $tmpPassword -Force -AsPlainText

$cert | Export-PfxCertificate -FilePath $certPath -Password $certPassword | Out-Null

Import the certificate:

$cert.Import($certPath, $certPassword, 'DefaultKeySet')

Save the thumbprint for later:

$cert.Thumbprint
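Because the self-signed certificate expires after three months, it can help to check how long it is still valid. The following is a minimal sketch, assuming the certificate sits in the LocalMachine\My store as created above; the thumbprint is the example value used later in this post and should be replaced with your own.

```powershell
# Sketch: look up the certificate by thumbprint and report the days until it expires.
# The thumbprint is the example value from this post; replace it with your own.
$thumbprint = "1D243A17BBD62271DA0DF8EE4FF0FD65C5AE5A37"

$stored = Get-ChildItem -Path "Cert:\LocalMachine\My" |
    Where-Object { $_.Thumbprint -eq $thumbprint }

if ($null -eq $stored) {
    Write-Warning "No certificate with thumbprint $thumbprint found in LocalMachine\My"
} else {
    # Whole days between now and the NotAfter date of the certificate
    $daysLeft = [int]($stored.NotAfter - (Get-Date)).TotalDays
    Write-Output "Certificate $($stored.Subject) expires in $daysLeft days"
}
```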

Now we install Windows Admin Center. I use a preview build, but you can get the latest version here: http://aka.ms/WACDownload

Don’t forget the trusted sites checkbox.

This is where the certificate thumbprint is used. The next step installs Windows Admin Center.

Now that Windows Admin Center is installed, we are almost ready.

The next steps are to stop the service and set it to manual start, as the Failover Cluster Manager controls its run state.

Set-Service ServerManagementGateway -StartupType "Manual"

Stop-Service ServerManagementGateway
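To confirm that the service really is stopped and set to manual before handing it to the cluster, a quick check like this can be used. It is only a sketch and relies on the standard Win32_Service CIM class.

```powershell
# Sketch: show the current state and start mode of the Windows Admin Center service.
# After the two commands above, State should be "Stopped" and StartMode "Manual".
Get-CimInstance -ClassName Win32_Service -Filter "Name='ServerManagementGateway'" |
    Select-Object Name, State, StartMode
```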

Now that this is ready, we need to think about the file location, as the files are currently on the C: drive.

We don’t want two or more configurations, so we place them on the CSV volume.

Copy all the files into the CSV volume folder.
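The copy can also be scripted. This is a rough sketch: the source folder is an assumption (the usual default install location), so check where the installer actually placed the files; the destination is the CSV folder used throughout this post.

```powershell
# Sketch: copy the installed files to the CSV folder.
# ASSUMPTION: $source is the default install folder; verify it on your node.
$source  = "C:\Program Files\Windows Admin Center"
$smePath = "C:\ClusterStorage\vdisk20\ux"

# Create the target folder if it does not exist yet
New-Item -Path $smePath -ItemType Directory -Force | Out-Null

# Copy everything below the install folder, including subfolders
Copy-Item -Path (Join-Path $source "*") -Destination $smePath -Recurse -Force

# sme.exe is the service binary referenced later in ImagePath
Test-Path (Join-Path $smePath "sme.exe")
```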

When this is done, we adjust the service.

$staticAddress = "10.255.255.222"

$portNumber = "443"

$smePath = "C:\ClusterStorage\vdisk20\ux"

$certThumbprint = "1D243A17BBD62271DA0DF8EE4FF0FD65C5AE5A37"

$clientAccessPoint = "mvpwac03"

$registryPath = "HKLM:\Software\Microsoft\ServerManagementGateway\Ha"

# Create the Ha key first if the installer did not create it

if (-not (Test-Path $registryPath)) { New-Item -Path $registryPath -Force | Out-Null }

New-ItemProperty -Path $registryPath -Name IsHaEnabled -Value "true" -PropertyType String -Force | Out-Null

New-ItemProperty -Path $registryPath -Name StoragePath -Value $smePath -PropertyType String -Force | Out-Null

New-ItemProperty -Path $registryPath -Name Thumbprint -Value $certThumbprint -PropertyType String -Force | Out-Null

New-ItemProperty -Path $registryPath -Name Port -Value $portNumber -PropertyType DWord -Force | Out-Null

New-ItemProperty -Path $registryPath -Name ClientAccessPoint -Value $clientAccessPoint -PropertyType String -Force | Out-Null

# Join multiple static addresses into one comma-separated string

$staticAddressValue = $staticAddress -join ','

New-ItemProperty -Path $registryPath -Name StaticAddress -Value $staticAddressValue -PropertyType String -Force | Out-Null

New-ItemProperty -Path HKLM:\Software\Microsoft\ServerManagementGateway -Name InstallDir -Value $smePath -PropertyType String -Force | Out-Null

New-ItemProperty -Path HKLM:\SYSTEM\CurrentControlSet\Services\ServerManagementGateway -Name ImagePath -Value "$smePath\sme.exe" -PropertyType String -Force | Out-Null

# Grant permissions to Network Service (SID S-1-5-20) for the UX folder

$Acl = Get-Acl $smePath

$sID = New-Object System.Security.Principal.SecurityIdentifier("S-1-5-20")

$Ar = New-Object System.Security.AccessControl.FileSystemAccessRule($sID, "FullControl", "ContainerInherit,ObjectInherit", "None", "Allow")

$Acl.SetAccessRule($Ar)

Set-Acl $smePath $Acl

After running this, the service path has changed to the CSV location and the Windows Admin Center HA values are in the registry.
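If you prefer to check the result from PowerShell instead of the registry editor, something along these lines should show the same values. It is a small sketch that only reads the keys written above.

```powershell
# Sketch: read back the HA values and the service image path written above.
Get-ItemProperty -Path "HKLM:\Software\Microsoft\ServerManagementGateway\Ha" |
    Select-Object IsHaEnabled, StoragePath, Thumbprint, Port, ClientAccessPoint, StaticAddress

# The service binary path should now point to sme.exe on the CSV volume
(Get-ItemProperty -Path "HKLM:\SYSTEM\CurrentControlSet\Services\ServerManagementGateway").ImagePath
```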

The last step on this cluster node is creating the cluster resource.

$staticAddress = "10.255.255.222"

$clientAccessPoint = "mvpwac03"

$registryPath2 = "SOFTWARE\Microsoft\ServerManagementGateway\Ha"

Add-ClusterGenericServiceRole -ServiceName ServerManagementGateway -Name $clientAccessPoint -CheckpointKey $registryPath2 -StaticAddress $staticAddress

Remember that the -CheckpointKey value for the HA cluster resource does not take the HKLM:\ prefix used in the other variables.
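Once the role exists, its state can be checked with the FailoverClusters cmdlets. The sketch below assumes the role name from this post; the move at the end is commented out because it only makes sense after the other nodes have been prepared.

```powershell
# Sketch: inspect the new generic service role and its resources.
Import-Module FailoverClusters

$clientAccessPoint = "mvpwac03"   # role name used in this post

# Owner node and state of the role
Get-ClusterGroup -Name $clientAccessPoint

# Resources inside the role (network name, IP address, generic service)
Get-ClusterGroup -Name $clientAccessPoint | Get-ClusterResource

# Optional failover test, only once the other nodes are prepared:
# Move-ClusterGroup -Name $clientAccessPoint
```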

Now Windows Admin Center is installed in the cluster. All the other nodes in the cluster need almost the same treatment.

Or we can export the registry keys and import them on the other nodes, as we have already placed the files on the CSV and created the cluster resource.

The other nodes don’t have a Windows Admin Center service. Importing the registry keys alone works, but then you need a reboot. If we first create a placeholder service and then import the registry keys, no reboot is needed. Otherwise, just import the registry keys and reboot the node.

New-Service -Name ServerManagementGateway -DisplayName "Windows Admin Center" -BinaryPathName "C:\ClusterStorage\vdisk20\ux"
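The two registry files listed next can be produced on the first node with reg.exe and imported on the other nodes. This is a sketch only: the export folder and the file names are made up for illustration, and the files need to be somewhere the other nodes can reach (the CSV folder is one option).

```powershell
# Sketch: export both keys on the first node (folder and file names are hypothetical).
$exportDir = "C:\temp"

# Software key, including the Ha subkey
reg.exe export "HKLM\SOFTWARE\Microsoft\ServerManagementGateway" "$exportDir\wac-software.reg" /y

# Service definition
reg.exe export "HKLM\SYSTEM\CurrentControlSet\Services\ServerManagementGateway" "$exportDir\wac-service.reg" /y

# On each other node, after creating the placeholder service:
# reg.exe import "$exportDir\wac-software.reg"
# reg.exe import "$exportDir\wac-service.reg"
```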

First registry file:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\ServerManagementGateway]

"Version"="1.1.24609.0"

"InstallDir"="C:\\ClusterStorage\\vdisk20\\ux"

"SmePort"="443"

"UseHttps"="1"

"DevMode"=""

"DataEncryptionCertificateThumbprint"="83D5FBC5AD758C2B23BADBD83117AC5EAA0E8F2B"

"RedirectPort80"="1"

[HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\ServerManagementGateway\Ha]

"IsHaEnabled"="true"

"StoragePath"="C:\\ClusterStorage\\vdisk20\\ux"

"Thumbprint"="1D243A17BBD62271DA0DF8EE4FF0FD65C5AE5A37"

"Port"=dword:000001bb

"ClientAccessPoint"="mvpwac03"

"StaticAddress"="10.255.255.222"

Second registry file:

Windows Registry Editor Version 5.00

[HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Services\ServerManagementGateway]

"Type"=dword:00000010

"Start"=dword:00000003

"ErrorControl"=dword:00000001

"ImagePath"="C:\\ClusterStorage\\vdisk20\\ux\\sme.exe"

"DisplayName"="Windows Admin Center"

"ObjectName"="NT Authority\\NetworkService"

"Description"="Windows Admin Center"

"FailureActions"=hex:80,51,01,00,00,00,00,00,00,00,00,00,03,00,00,00,14,00,00,\

00,00,00,00,00,d0,07,00,00,00,00,00,00,d0,07,00,00,00,00,00,00,00,00,00,00

"Environment"=hex(7):43,00,4f,00,4d,00,50,00,55,00,54,00,45,00,52,00,4e,00,41,\

00,4d,00,45,00,3d,00,4d,00,56,00,50,00,57,00,41,00,43,00,30,00,33,00,00,00,\

5f,00,43,00,4c,00,55,00,53,00,54,00,45,00,52,00,5f,00,4e,00,45,00,54,00,57,\

00,4f,00,52,00,4b,00,5f,00,46,00,51,00,44,00,4e,00,5f,00,3d,00,4d,00,56,00,\

50,00,57,00,41,00,43,00,30,00,33,00,2e,00,6d,00,76,00,70,00,2e,00,6c,00,6f,\

00,63,00,61,00,6c,00,00,00,5f,00,43,00,4c,00,55,00,53,00,54,00,45,00,52,00,\

5f,00,4e,00,45,00,54,00,57,00,4f,00,52,00,4b,00,5f,00,48,00,4f,00,53,00,54,\

00,4e,00,41,00,4d,00,45,00,5f,00,3d,00,4d,00,56,00,50,00,57,00,41,00,43,00,\

30,00,33,00,00,00,5f,00,43,00,4c,00,55,00,53,00,54,00,45,00,52,00,5f,00,4e,\

00,45,00,54,00,57,00,4f,00,52,00,4b,00,5f,00,4e,00,41,00,4d,00,45,00,5f,00,\

3d,00,4d,00,56,00,50,00,57,00,41,00,43,00,30,00,33,00,00,00,00,00

With this in place, all nodes can run Windows Admin Center in HA mode. It will not run in IE, though, and that is the only browser installed by default on the server. To test whether it works you need Edge or Chrome.

As you can see, it is not that simple to make things highly available by hand. You can use the PowerShell scripts provided by Microsoft instead, but if you do, you need to rename the MSI file when you are using the Insider Preview or any other build that is not named ServerManagementGateway.msi.

Rename WindowsAdminCenterPreview1808.msi to ServerManagementGateway.msi.
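The rename itself is a one-liner. In this sketch the download folder is an assumption; only the two file names come from this post.

```powershell
# Sketch: rename the preview MSI so the Microsoft HA script can find it.
# ASSUMPTION: the MSI was saved in C:\temp; adjust the folder to your download location.
$downloaded = "C:\temp\WindowsAdminCenterPreview1808.msi"

if (Test-Path $downloaded) {
    Rename-Item -Path $downloaded -NewName "ServerManagementGateway.msi"
} else {
    Write-Warning "MSI not found at $downloaded"
}
```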

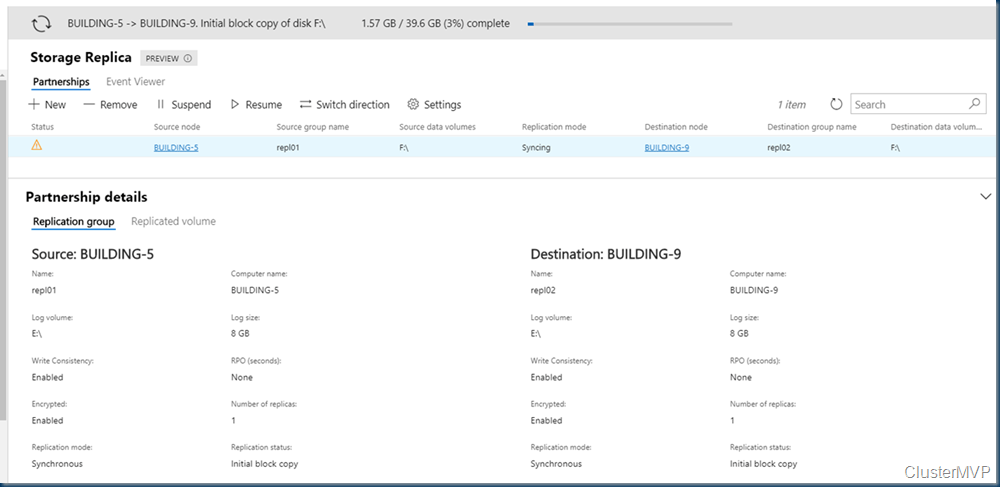

You can deploy Windows Admin Center in a failover cluster to provide high availability for your Windows Admin Center gateway service. The solution provided is an active-passive solution, where only one instance of Windows Admin Center is active. If one of the nodes in the cluster fails, Windows Admin Center gracefully fails over to another node, letting you continue managing the servers in your environment seamlessly.

The high-availability deployment scripts come in the Windows Admin Center HA Setup Scripts zip file. Download the .zip file containing these scripts to your local machine and then copy the scripts as needed.

Follow Me on Twitter @ClusterMVP

Follow My blog https://robertsmit.wordpress.com

Linkedin Profile Robert Smit MVP Linkedin profile

Google : Robert Smit MVP profile